Table of contents

- What is Containerization?

- Why Podman vs Docker?

- Containers vs Virtual Machines

- Why are containers important?

- What is Docker?

- How does Docker work?

- What is Podman?

- What are pods?

- Running a Redis server inside a container using Podman

- Podman vs Docker Comparison

- Architecture: Podman has a Daemonless architecture

- Root privileges

- Rootless Execution

- Security

- Building images

- Docker Swarm and docker-compose

- All in one vs modular

- Is Podman a replacement for Docker, or can they work together?

- How to migrate from Docker to Podman?

- Running a Node.js container with Podman

- Conclusion

Docker and Podman are great container management engines and serve the same purpose in building, running, and managing containers.

Docker has been in the containerization market for quite a while and has proved its worth, which has also driven demand for Docker skills in the job market. It was first released in 2013 by Docker Inc. (then called dotCloud) and has been used by some of the biggest names in technology, such as Google and Facebook, making it the most popular container engine out there.

Now that containers have become a lot more popular and are used almost everywhere, other tools like Podman have appeared to improve on the experience and solve some specific problems we face with containerization.

In this Podman vs Docker comparison blog, you will learn about both Podman and Docker and get a much better understanding of both container engines. By the end of the blog post, you will be able to decide which container engine suits you best.

Chances are you are currently using Docker, so a later section of this blog also explains how to migrate from Docker to Podman without running into issues.

So, let’s get started.

What is Containerization?

Containerization is a very efficient form of virtualization. It makes it much easier for developers to build, test, and deploy large-scale applications.

Containers and virtual machines are similar, but they’re not the same. Because both provide isolated environments, some people tend to confuse them, even though they achieve that isolation differently.

Why Podman vs Docker?

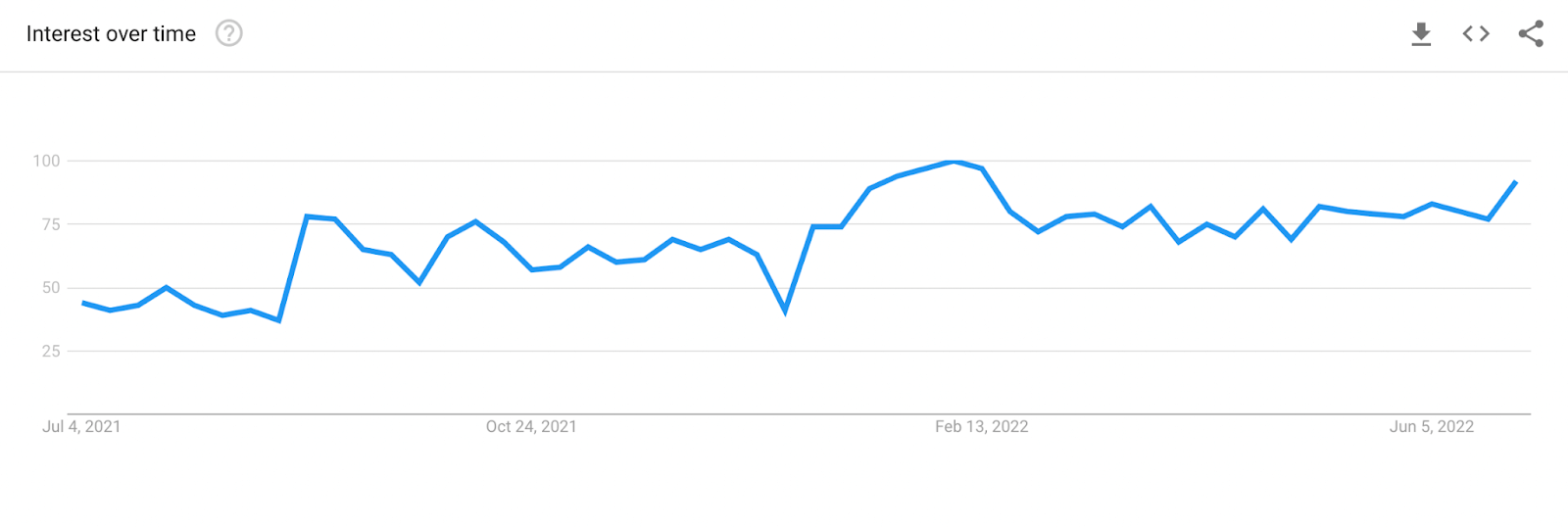

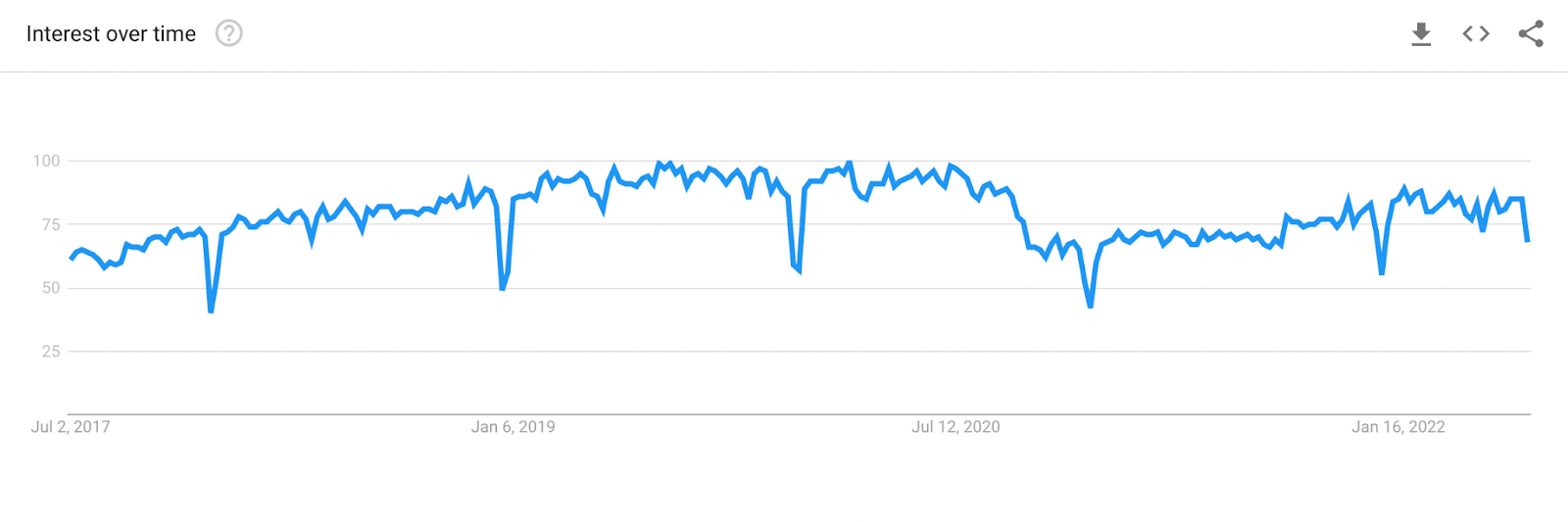

If we look at Google Trends, we see that search interest in Podman is steadily rising. We will likely see Podman become more popular in the next few years.

Docker is still a leading player in the field, and I don’t think it is going anywhere anytime soon, but the charts tell us that the overall interest in Docker is decreasing.

Either developers are losing interest in Docker, or they have migrated to other software that does the job better than Docker.

Before getting into the Podman vs Docker differences, let’s start by understanding what a container is. Some people tend to confuse containers with virtual machines because both provide virtualization, but they’re quite different.

Containers vs Virtual Machines

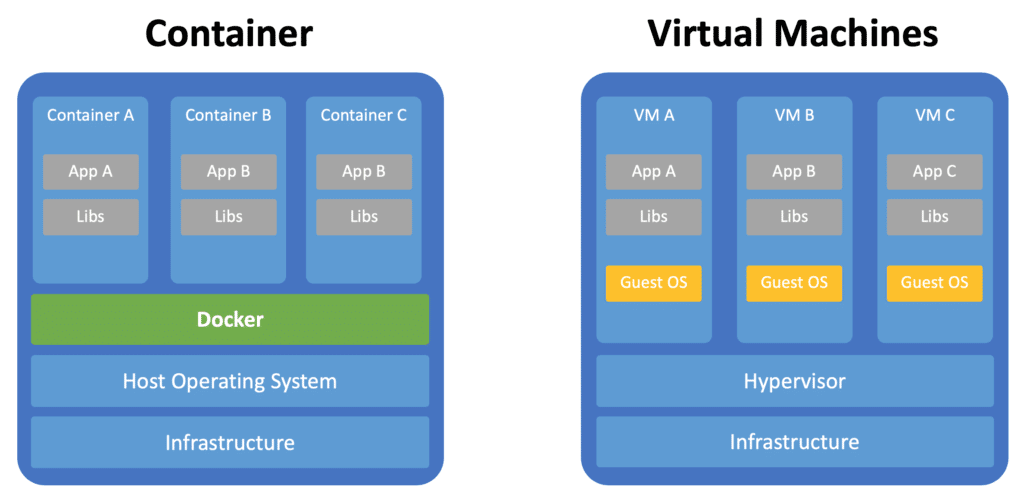

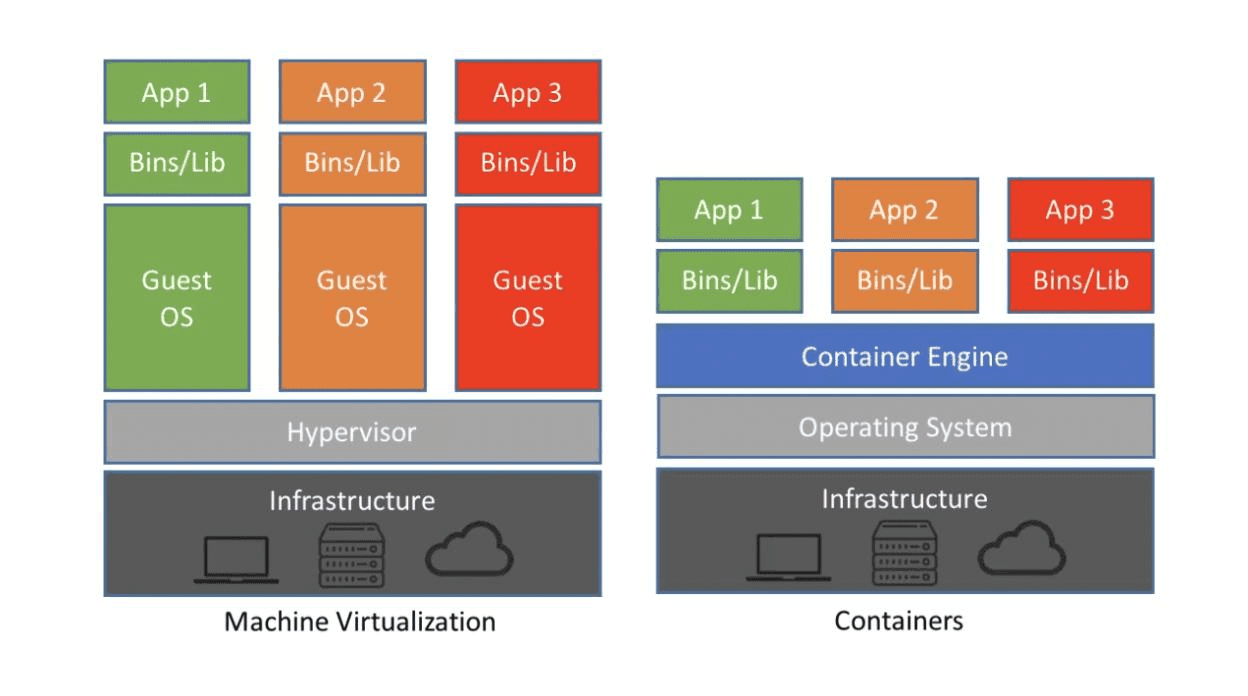

In Virtual Machines (VMs), the system’s resources are distributed between multiple virtual machines. The VMs run separately and can have different operating systems in them.

Containers, on the other hand, only virtualize software layers above the operating system level, which means you can have multiple virtualized environments with only one operating system. To learn more about the differences, you can read through this blog, which discusses Containers vs Virtual Machines.

Containerization is the packaging of software code with just the OS libraries and dependencies required to run the code to create a single lightweight executable, called a container.


Why are containers important?

Containers play a considerable role in deploying applications, especially microservices. Microservices are small, independent services that communicate with each other, sending and receiving data over the network.

According to one report, Netflix now uses over 1,000 microservices. Each deployed service controls a specific aspect of the colossal Netflix operation. For example, one microservice is responsible only for determining your subscription status so that you get content relevant to that subscription tier.

Microservices allow teams to work on different parts of their applications separately without interfering with another team’s work. They are flexible, maintainable, and easily adaptable.

The microservices design patterns have helped developers to develop, scale, and maintain their projects efficiently. However, when it comes to deployment, they might face a few challenges, especially in large-scale applications.

If you don’t use containers, you will have to write a set of instructions for your DevOps team so that they can run the code on their side. This increases the chance of errors in the deployed application.

To learn more about microservices, you can refer to this blog on how to test a microservice architecture application.

Challenges of not using Containers

If the DevOps team makes a mistake in following your instructions, they will not be able to run the code on their side. So they will get back to you, and you’ll have to find that little mistake.

For example, let’s say you are using MongoDB version 4.0, but version 4.1 was installed in production by mistake, and one of your microservices is not compatible with that specific version.

These errors are often introduced in large-scale applications and can be challenging to debug.

As your application grows, it requires more dependencies and microservices, which will be quite a headache to re-deploy manually every time something new is added.

Containerization makes this so much easier. You don’t have to give instructions to your DevOps team as the instructions are written in a Dockerfile. The container engine will automatically download and install every dependency that your code requires to run.

```dockerfile
FROM node:16

# Create app directory
WORKDIR /usr/src/app

# Install app dependencies
# A wildcard is used to ensure both package.json AND package-lock.json are copied
# where available (npm@5+)
COPY package*.json ./

RUN npm install
# If you are building your code for production
# RUN npm ci --only=production

# Bundle app source
COPY . .

EXPOSE 8080
CMD [ "node", "server.js" ]
```

Docker will read the instructions in the file, download the dependencies for that specific application, and finish with the command that runs the application. In this case, we are pulling a specific version of the official Node.js Docker image.

These images can depend on other images and packages, which in turn can rely on further dependencies, and so on. Docker handles all that hard work in the background when it builds and runs containers.

Since containers package only the software layers above the operating system, they are lightweight and can be created, modified, and deleted with ease.

Most container runtime systems also have a robust ecosystem. Many public repositories of container images are ready to be downloaded and executed.

For example, you can download the latest version of Selenium from Docker Hub and run it inside a Docker container with a couple of commands. The explicit pull is optional, since `docker run` pulls the image if it is missing.

```shell
docker pull selenium/standalone-docker
docker run selenium/standalone-docker
```

This will download the official Selenium Docker image from Docker Hub along with all of its required dependencies. Selenium will then run inside the Docker container.

Today, almost every large company uses containerization to build, test, and deploy its applications. Containers, and Docker in particular, have proved their importance over the last few years.

What is Docker?

Docker is a Platform as a Service (PaaS) product that uses operating-system-level virtualization to deliver software in small, lightweight packages called containers.

For starters, PaaS is a complete, cloud-hosted development and deployment platform. It allows businesses and developers to host, build, and deploy applications.

Docker is also open-source and helps developers build, deploy, run, and manage containerized applications. A process running in the background exposes a REST API, listens for requests, and performs operations accordingly. This process is called the Docker daemon (or dockerd).

Docker daemon is a background process responsible for managing all containers on a single host. It can handle all Docker images, containers, networks, storage, etc.

Under the hood, the Docker daemon hands most of this work to another daemon, containerd: managing images, containers, volumes, and networks. containerd listens on a Unix socket and exposes gRPC endpoints. It handles the low-level container management tasks such as storage, image distribution, and network attachment.

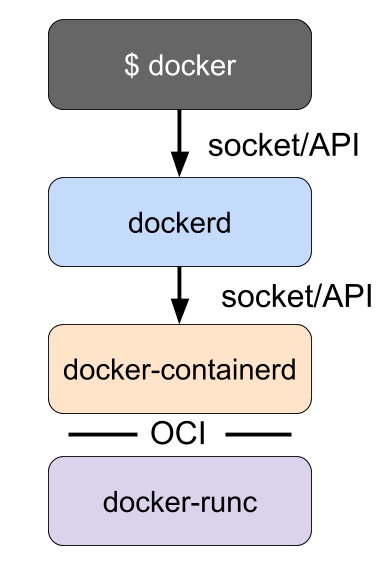

How does Docker work?

The containerd daemon will pull the container images from the container registries. Then it turns over the process of creating the container to a low-level runtime named runc.

runc is a CLI tool for running containers on Linux according to the OCI specification.

OCI (Open Container Initiative) is a collaborative project hosted under the Linux Foundation and designed to establish standards for containers. Container engines like Docker, CRI-O, and containerd rely on an OCI-compliant runtime to interface with the operating system and create the running containers.

runc is designed to be used by higher-level container software. Hence, running a container goes through the Docker daemon, then containerd, and finally runc.

- Docker Image

A Docker image is like a template that acts as a set of instructions to build a Docker container. A Docker image contains application code, libraries, tools, dependencies, and other files needed to run the application.

- Docker Volume

Let’s say you have a Docker container with a MongoDB database inside it. By default, this container saves every bit of data inside the container itself.

The problem with this is that if you delete the container for whatever reason, you lose all of the data that you have accumulated.

With Docker volumes, you can store the data you need on the host machine instead. Volumes are the preferred way to persist data for Docker containers and services.
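As a minimal sketch of the volume idea, the helper below creates a named volume and mounts it at /data/db, the directory where the official mongo image keeps its database files. The function name and the names mongo-data and mongo-db are hypothetical, chosen only for illustration.

```shell
# Hypothetical helper: run MongoDB with its data stored in a named volume.
# "mongo-data" and "mongo-db" are illustrative names, not from the original post.
run_mongo_with_volume() {
  # Create the volume on the host (harmless if it already exists).
  docker volume create mongo-data
  # /data/db is where the official mongo image stores its database files.
  docker run -d --name mongo-db -v mongo-data:/data/db mongo:4.0
}
```

Calling `run_mongo_with_volume` starts the container. If the container is later removed, the data stays in mongo-data, and a new container mounted on the same volume should pick it up.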

- Docker Network

A Docker network connects containers so that they can communicate with each other.

Docker is not just software to build and run containers. It also includes a cloud platform for uploading and downloading your container images.

With Docker, you can build and run containers on any operating system that Docker supports. In other words, if your code runs in a container on your machine, you can expect it to run the same way on another machine that has Docker installed.

A quick summary about Docker:

- Docker uses a daemon, a background process that listens for API requests and manages the containers accordingly.

- Docker lets you build and run super lightweight containers.

- Docker is a PaaS made up of multiple pieces of software, including Docker Hub, a cloud-hosted registry for sharing and downloading Docker images.

What is Podman?

Podman is a container engine similar to Docker. It is also used for developing, managing, and running OCI containers. Podman can manage the entire container ecosystem, including pods, containers, images, and container volumes, using the libpod library.

What are pods?

One of the key features of Podman is that it allows you to create pods. A pod is an organizational unit for containers. Pods are an essential part of the Kubernetes container orchestration framework.

You can use Podman to create manifest files that describe pods in a declarative format. Kubernetes can consume these manifest files written in YAML.
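The pod workflow described above can be sketched as follows, assuming Podman is installed and provides the `generate kube` subcommand. The function name, the names demo-pod and demo-redis, and the output file are hypothetical.

```shell
# Hypothetical helper: group a container into a pod and export a Kubernetes manifest.
# "demo-pod", "demo-redis", and the default output file are illustrative names.
export_pod_manifest() {
  manifest="${1:-demo-pod.yaml}"
  # Ports are published on the pod, not on the individual containers.
  podman pod create --name demo-pod -p 6379:6379
  # Start a container inside the pod.
  podman run -d --pod demo-pod --name demo-redis redis
  # Write a Kubernetes-style YAML description of the pod to the chosen file.
  podman generate kube demo-pod > "$manifest"
}
```

Running `export_pod_manifest` leaves a demo-pod.yaml file in the current directory, which is the kind of manifest Kubernetes can consume.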

Podman containers can be run by root or by a non-root user out of the box, whereas Docker runs as root by default. We will discuss this Podman vs Docker difference in more detail in a later section of this blog.

Podman does not rely on a daemon to develop, manage, or run containers. Instead, it integrates with systemd, the Linux service manager, to manage services and keep containers running in the background.

Podman can generate systemd unit files for existing containers or for new ones. With systemd, vendors can install, run, and manage their container applications, since most are now packaged and delivered this way.
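As a rough sketch of the systemd integration, the helper below creates a container, generates a unit file for it, and hands it to the user's systemd instance. It assumes a systemd user session and a Podman version that ships the `generate systemd` subcommand (newer releases recommend Quadlet instead). The function name and the container name redis are hypothetical.

```shell
# Hypothetical helper: let systemd manage a Redis container for the current user.
# Assumes a systemd user session and Podman's "generate systemd" subcommand.
install_redis_unit() {
  unit_dir="$HOME/.config/systemd/user"
  mkdir -p "$unit_dir"
  # Create (but do not start) a container named "redis" for the unit to manage.
  podman create --name redis -p 6379:6379 redis
  # --name uses the container name instead of its ID inside the generated unit.
  podman generate systemd --name redis > "$unit_dir/container-redis.service"
  # Reload unit files, then enable and start the service.
  systemctl --user daemon-reload
  systemctl --user enable --now container-redis.service
}
```

After `install_redis_unit`, the container is started and stopped by systemd like any other user service.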

Podman, with the help of Buildah, can build images without using a daemon. Buildah is a tool for building OCI-compatible images through a lower-level core utils interface.

Just like Docker, Podman has a CLI. Since images created by Docker and Podman are both compatible with the OCI standard, Podman can push to and pull from container registries such as Docker Hub and Quay.io.

If you are already familiar with Docker, you can easily migrate from Docker to Podman (more on this later). You can also alias docker to Podman without any problems by running `alias docker=podman`. This means you can use the same commands with Podman as with Docker.
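A minimal sketch of that alias, assuming Podman is installed and you use a shell such as bash:

```shell
# Assumes Podman is installed; add the line to ~/.bashrc to make it permanent.
alias docker=podman
```

In an interactive shell, typing `docker ps` then runs `podman ps`. Shell scripts usually do not expand aliases, so scripts that call docker directly may still need to be adjusted.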

Podman, just like Docker, relies on an OCI-compliant runtime such as runc to interface with the operating system and create the running containers. This makes Podman very similar to other container engines like Docker.

Podman also provides a REST API for managing containers; this REST API is only available on Linux. To interact with it, Podman has a client that is available on all of the major operating systems: Windows, macOS, and Linux.

If the Podman REST API is only available on Linux, how can we use it in Windows or macOS?

Even though containers are a Linux technology, Podman also runs on macOS and Windows. It provides a native Podman CLI and embeds a guest Linux system to launch the containers.

This guest is also called a Podman machine and can be managed by using the podman machine command.
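On macOS or Windows, managing that guest might look like the sketch below. The function name is hypothetical, and the subcommands assume a Podman release that ships `podman machine`.

```shell
# Hypothetical helper for macOS or Windows: create and boot the Linux guest.
start_podman_machine() {
  # Download the guest image and create the default machine (needed once).
  podman machine init
  # Boot the guest; the podman client then talks to it transparently.
  podman machine start
  # List machines to confirm that it is running.
  podman machine list
}
```

Once the machine is running, commands such as `podman run` work the same way as on Linux.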

A quick summary about Podman:

- Podman helps you find containers on Docker Hub, Quay.io, or an internal registry server.

- It easily runs containers from pre-built container images.

- It builds new layers or containers with a few tweaks.

- You can easily share your container images anywhere you want with just one command, `podman push`.

Running a Redis server inside a container using Podman

Running containers with Podman is pretty easy and similar to Docker. To do that, run the following command:

```shell
podman run -d --name redis -p 6379:6379 redis
```

Podman will run a Redis server based on the official Redis image inside a container. The container runs in detached mode, as declared by `-d`, and is named redis, as specified with `--name`.

With `-p 6379:6379`, the container’s port 6379, the default Redis port, is published on the same port of the host machine.

After you run the command, Podman checks whether the Redis image already exists on the local machine. If it does, Podman creates a container based on that image. If it doesn’t, Podman looks for the image in a container registry such as Docker Hub and downloads the latest version.
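`podman run` performs this lookup on its own; the sketch below only makes the two steps explicit. The function name ensure_image is hypothetical.

```shell
# Hypothetical helper: pull an image only when it is not stored locally.
ensure_image() {
  image="$1"
  # "podman image exists" exits with status 0 when the image is in local storage.
  if podman image exists "$image"; then
    echo "using local copy of $image"
  else
    podman pull "$image"
  fi
}
```

For example, `ensure_image docker.io/library/redis` pulls the Redis image the first time and reuses the local copy afterwards.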


Podman vs Docker Comparison

Generally, Podman and Docker do the same thing. However, there are some differences between the two container engines.

Here are the main differences between Podman and Docker:

Architecture: Podman has a Daemonless architecture

Docker daemon is a background process responsible for managing all containers on a single host. It can handle all Docker images, containers, networks, storage, etc. Docker daemon uses a REST API to listen to requests and perform operations accordingly.

So, Docker needs this daemon to run in the background to manage, create, run, and build containers. Docker has a client-server logic mediated by the Docker daemon.

Podman, on the other hand, does not need a daemon. Its daemonless architecture lets users run containers rootless. In other words, Podman does not require root privileges to manage containers.

Root privileges

Since Docker needs a daemon to manage its containers, and that daemon runs as root by default, Docker needs root permission to run its processes.

As Podman has a daemonless architecture, it does not require root privileges for its containers.

Rootless Execution

At first, when Docker came out, you could not run Docker without root permission. However, the rootless mode was introduced in Docker at v19.03 and graduated from experimental in Docker Engine v20.10.

However, Docker rootless mode doesn’t work right out of the box. Some configuration and third-party packages must first be set up on the host before installing Docker.

So, yes, you can run both Docker and Podman without root. But it is good to know that Podman introduced rootless containers first, while rootless mode on Docker comes with a few limitations.

Limitations of Docker rootless

Rootless mode on Docker is not perfect, and some issues come with running containers with Docker rootless:

- Containers cannot bind privileged ports, which are all of the ports below 1024, without extra configuration. Otherwise, they will fail to run.

- Only the following storage drivers are supported:

  - overlay2 (only if running with kernel 5.11 or later, or an Ubuntu-flavored kernel).

  - fuse-overlayfs (only if running with kernel 4.18 or later, and fuse-overlayfs is installed).

  - btrfs (only if running with kernel 4.18 or later, or ~/.local/share/docker is mounted with the user_subvol_rm_allowed mount option).

  - vfs.

- Cgroup is supported only when running with cgroup v2 and systemd.

- The following features are not supported:

  - AppArmor

  - Checkpoint

  - Overlay network

  - Exposing SCTP ports

- The ping command does not work without additional host configuration.

- The IPAddress shown in `docker inspect` is namespaced inside RootlessKit’s network namespace. This means the IP address is not reachable from the host without `nsenter`-ing into the network namespace.

- Host network (`docker run --net=host`) is also namespaced inside RootlessKit.

- NFS mounts as the docker “data-root” are not supported. This limitation is not specific to rootless mode.

Security

Does Podman have higher security than Docker? Earlier in this comparison, we saw that Podman runs rootless by default because of its daemonless architecture, while Docker, by default, depends on a daemon that runs as root to manage its containers.

But why is this important? Why is it essential to have no daemon and to be able to run containers without root privileges? The answer is that we do not give root privileges to applications that we don’t trust, and it is tempting to assume that we can trust our own containers and applications.

But that’s not always the case. Let’s say an attacker has found a way to access one of your containers. Since the Docker daemon runs with root privileges by default, an attacker who breaks out of the container can take harmful actions on your server with those root privileges.

With rootless Podman, an attacker who gets access to your container can still do harm, but they won’t be able to perform actions that require root privileges on the host. Therefore, Podman is considered safer than Docker.

Building images

Docker is a self-sufficient platform. It can build images and run containers on its own without the need for any other third-party tool.

Podman, on the other hand, is designed primarily to run containers and relies on another tool to build them. That’s where Buildah comes in. Buildah is an open-source tool for building Open Container Initiative (OCI) container images.

With the help of Buildah, which the `podman build` command uses under the hood, Podman can build its own OCI container images.

Docker Swarm and docker-compose

Docker Swarm is a container orchestration platform used to manage Docker containers. With the Docker Swarm, you can run a cluster of Docker nodes and deploy scalable applications without other dependencies required.

Docker Swarm can also manage multiple containers across multiple hosts and connect them all. Docker Swarm obviously can be used with Docker right out of the box without any problem.

Podman does not support Docker Swarm, but other tools can be used with Podman, such as Nomad, which comes with a Podman driver.

Docker Compose (docker-compose) is a tool to manage applications with multiple containers. The main difference between Docker Compose and Docker Swarm is that docker-compose runs only on a single host, while Docker Swarm connects various hosts.

Docker is compatible with docker-compose and works well with it right out of the box.

Earlier versions of Podman had no way to simulate the Docker daemon that docker-compose needs, which is why the separate podman-compose tool was used.

Since version 3.0, Podman provides podman.socket, a UNIX socket that exposes a Docker-compatible API and stands in for the Docker daemon, so docker-compose can work with Podman.
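A minimal sketch of wiring docker-compose to that socket is shown below. It assumes rootless Podman 3.0 or later and a systemd user session in which XDG_RUNTIME_DIR is set; the function name is hypothetical.

```shell
# Hypothetical helper: point Docker-compatible clients at Podman's socket.
# Assumes a systemd user session (XDG_RUNTIME_DIR set) and rootless Podman 3.0+.
use_podman_socket() {
  # Start the socket-activated Podman API service for the current user.
  systemctl --user enable --now podman.socket
  # Docker clients, including docker-compose, honor DOCKER_HOST.
  export DOCKER_HOST="unix://${XDG_RUNTIME_DIR}/podman/podman.sock"
}
```

After calling `use_podman_socket`, running `docker-compose up -d` in a project directory should send its requests to Podman instead of the Docker daemon.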

All in one vs modular

Podman has a modular approach, relying on specialized tools for specific tasks, while Docker is a monolithic, powerful, independent tool. Monolithic here means that Docker does not rely on any third-party tool to run, build, or manage containers, or to handle any other container-related task.

This one is a significant difference between Podman vs Docker technologies.

As we discussed, Podman relies on additional third-party tools to achieve the same goals as Docker. For example, it uses Buildah to build container images, while Docker does not need Buildah or any other third-party tool to build images.

Here’s the summary of the Podman vs Docker comparison.

| DOCKER | PODMAN |
| --- | --- |
| Uses a daemon. | Has a daemonless architecture. |
| Runs with root privileges by default; rootless mode is available with limitations. | Able to run rootless out of the box. |
| Less secure by default, since the daemon runs with root privileges. | More secure, since containers don’t need root privileges. |
| Docker is a self-sufficient tool and builds images on its own. | Podman relies on another tool, Buildah, to build images. |
| Supports Docker Swarm and Docker Compose right out of the box. | Does not support Docker Swarm; works with Docker Compose through podman.socket since version 3.0. |
| Docker is a monolithic, independent tool. | Podman relies on other third-party tools. |

Now that we have compared Podman and Docker, is it safe to consider Podman a replacement for Docker? Let’s check this in the next section of this Podman vs Docker blog.

Is Podman a replacement for Docker, or can they work together?

Some developers use Podman for production and Docker for development. Since they’re both OCI compliant, compatibility won’t be a problem. However, it’s better to compare Podman vs Docker before moving ahead.

You can use Docker for development to make your work easier and use Podman for production to benefit from the added security it provides and make your application more efficient.

Podman can be the primary containerization technology if you start a project from scratch. If the project is ongoing and already uses Docker, it depends on the specifics, but migrating might not be worth the effort. Because Podman is a Linux-native application, it also demands Linux skills from the developers involved.

How to migrate from Docker to Podman?

Migrating from Docker to Podman can be quite simple. The commands you use with Docker also work with Podman, so you don’t have to worry about memorizing new commands, which makes migrating really easy.

Some developers just alias Docker to Podman, and everything works quite the same, but the processes are handled by Podman instead of Docker.

Running a Node.js container with Podman

First, you must have Podman installed on your computer. If you want to follow along with this short tutorial, you can download the sample Node.js application from this GitHub repository.

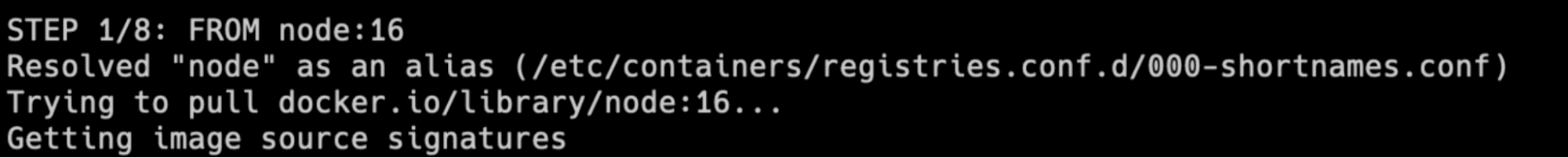

Since both Podman and Docker comply with the OCI container standard, Podman can also build images from a Dockerfile. If you can build and run a container from a Dockerfile with Docker, you can do the same with Podman.

Let’s build an image from this Dockerfile:

```dockerfile
FROM node:16

# Create app directory
WORKDIR /usr/src/app

# Install app dependencies
# A wildcard is used to ensure both package.json AND package-lock.json are copied
# where available (npm@5+)
COPY package*.json ./

RUN npm install
# If you are building your code for production
# RUN npm ci --only=production

# Bundle app source
COPY . .

EXPOSE 3000
CMD [ "node", "server.js" ]
```

To build the image, run the following command:

```shell
podman build -t my-node-app .
```

After you run the command, Podman will execute the instructions in the Dockerfile and build the image on your local machine.

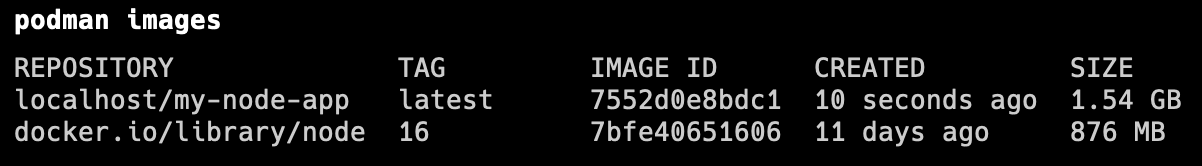

You can list the container images available on your local machine by running `podman images`.

We only built one image, my-node-app, but notice that there is an additional image. Since our image is based on node:16, Podman automatically downloaded that base image during the build.

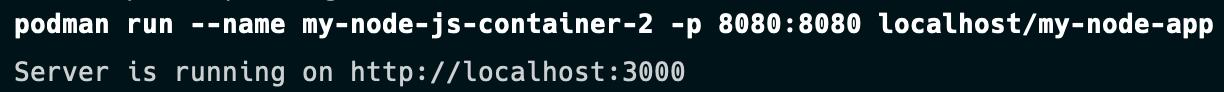

After you have built the image, you can run an instance of it as a container using the following command:

```shell
podman run --name my-node-js-container -p 3000:3000 localhost/my-node-app
```

The `--name` flag specifies the name of the container, and `-p` maps a port on the host machine to a port inside the container, in the form `-p host:container`.

Finally, `localhost/my-node-app` at the end specifies the image that the new container is created from.

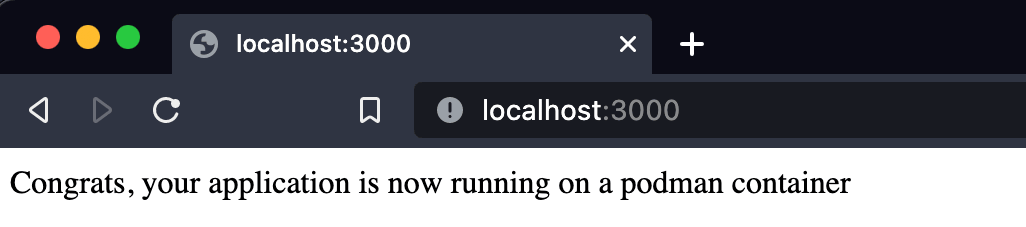

Congratulations, your server is now running on port 3000 inside a container run by Podman. You can access it from your browser.

All the commands we used in this tutorial are the same as the Docker commands. That’s why migrating from Docker to Podman is easy, and developers get used to it effortlessly.

Another cool feature of Podman is that you can stop or remove all containers using the `--all` flag, which the equivalent Docker commands do not offer.

For example, if you want to stop all containers with Podman, you can run `podman stop --all`. To do the same thing with Docker, you have to run `docker stop $(docker ps -a -q)`, which is a bit more difficult to memorize.

Integrating Docker or Podman with Selenium Grid is easy and helps you perform Selenium automation testing. However, the capabilities offered by the Selenium Grid with Docker or Podman can be further enhanced by integrating an online Selenium Grid like LambdaTest.


LambdaTest is a cross browser testing platform that allows you to perform manual and automated browser testing of websites and web apps on an online browser farm of 3000+ browsers and operating systems combinations.

LambdaTest’s reliable, scalable, secure, and high-performing test execution cloud empowers development and testing teams to expedite their release cycles. It lets you run parallel tests and cut down test execution by more than 10x.


Conclusion

In this Podman vs Docker blog, we have seen that both Docker and Podman are great at running and managing containers. You can build and deploy large-scale applications with both of them.

If you are more concerned about security in your applications or planning to use Kubernetes to orchestrate your containers, Podman is the better choice for you.

If you want a well-documented tool with a much larger user base, then Docker is the better option for you.